Data centers and data center cooling systems are critical, energy-hungry infrastructures that operate around the clock. They provide computing functions that are vital to the daily operations of top economic, scientific, and technological organizations around the world.

The amount of energy consumed by these centers is estimated at 3% of the total worldwide electricity use, with an annual growth rate of 4.4%. Naturally, this has a tremendous economic, environmental, and performance impact that makes the energy efficiency of cooling systems one of the primary concerns for data center designers, ahead of the traditional considerations of availability and security [1]. This article will discuss the ASHRAE data center standards that correlate with these concerns.

ASHRAE data center standards and accompanying studies also show that the largest energy consumer in a typical data center is the cooling infrastructure (50%), followed by servers and storage devices (26%) [2]. Thus, in order to control costs while meeting the increasing demand for data center facilities, designers must make the cooling infrastructure and its energy efficiency their primary focus. This is where the ASHRAE data center standards come in.

Data Center Cooling Systems (HVAC): Which Standards to Follow?

Until recently, this was a challenging task because the industry standards used to assess the energy efficiency of data centers and server facilities were inconsistent. To establish a governing rule for data center HVAC energy efficiency measurements, power usage effectiveness (PUE) was introduced in 2010. However, it served as a performance metric rather than a design standard and did not address relevant design components, so the problem remained.
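For readers unfamiliar with the metric, PUE is the ratio of the total energy drawn by the facility to the energy delivered to the IT equipment. The short sketch below illustrates that ratio. The function name and all energy figures are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return PUE = total facility energy / IT equipment energy (dimensionless)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical annual figures, for illustration only.
it_energy_kwh = 1_000_000.0      # servers, storage, network
cooling_energy_kwh = 600_000.0   # HVAC
other_energy_kwh = 150_000.0     # lighting, power distribution losses

total_kwh = it_energy_kwh + cooling_energy_kwh + other_energy_kwh
print(f"PUE = {power_usage_effectiveness(total_kwh, it_energy_kwh):.2f}")
```

A value close to 1 means that almost all of the energy reaches the IT equipment. Because the ratio is measured on an operating facility, it says little about how an individual design component will perform, which is the gap described above.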

New Energy Efficiency Standard ASHRAE 90.4

This led the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE), one of the main organizations responsible for developing guidelines for various aspects of building design, to develop a new standard that would be more practical for the data center industry. The resulting standard, ASHRAE 90.4, was in development for several years and was published in September 2016, bringing a much-needed standard to the data center community. According to ASHRAE, this new data center HVAC standard, among other things, “establishes the minimum energy efficiency requirements of data centers for design and construction, for the creation of a plan for operation and maintenance and for utilization of on-site or off-site renewable energy resources.” [3]

Overall, this new ASHRAE 90.4 standard contains recommendations for the design, construction, operation, and maintenance of data centers. This ASHRAE data center standard explicitly addresses the unique energy requirements of data centers as opposed to standard buildings, thus integrating the more critical aspects and risks surrounding the operation of data centers.

And unlike the PUE energy efficiency metric, the calculations in ASHRAE 90.4 are based on representative components related to design. Organizations need to calculate efficiencies and losses for different elements of the systems and combine them into a single number, which must be equal to or less than the published maximum figures for each climate zone.
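The idea of combining component figures into one number and comparing it with a climate-zone maximum can be sketched as follows. This is a simplified illustration, not the calculation procedure of the standard: the field names, the zone labels, and above all the limit values are placeholders that we made up for the example. The actual maxima must be taken from ASHRAE 90.4 itself.

```python
from dataclasses import dataclass


@dataclass
class MechanicalDesign:
    """Design powers in kW. Field names are illustrative, not taken from the standard."""
    cooling_kw: float
    pump_kw: float
    heat_rejection_fan_kw: float
    air_handler_fan_kw: float
    it_design_kw: float


def mechanical_load_ratio(design: MechanicalDesign) -> float:
    """Combine the mechanical loads into one number relative to IT design power."""
    if design.it_design_kw <= 0:
        raise ValueError("IT design power must be positive")
    mechanical_kw = (
        design.cooling_kw
        + design.pump_kw
        + design.heat_rejection_fan_kw
        + design.air_handler_fan_kw
    )
    return mechanical_kw / design.it_design_kw


# PLACEHOLDER limits keyed by hypothetical zone labels.
# These are NOT values from ASHRAE 90.4; look up the published maxima there.
PLACEHOLDER_MAX_RATIO = {"zone_A": 0.30, "zone_B": 0.25}


def complies(design: MechanicalDesign, zone: str) -> bool:
    """True if the combined ratio does not exceed the maximum for the zone."""
    return mechanical_load_ratio(design) <= PLACEHOLDER_MAX_RATIO[zone]


# Hypothetical design used only to exercise the functions.
example = MechanicalDesign(
    cooling_kw=200.0,
    pump_kw=20.0,
    heat_rejection_fan_kw=15.0,
    air_handler_fan_kw=35.0,
    it_design_kw=1000.0,
)
for zone in PLACEHOLDER_MAX_RATIO:
    print(zone, f"{mechanical_load_ratio(example):.2f}", complies(example, zone))
```

The same design can pass in one zone and fail in another, which is why the check has to be repeated whenever the location or the cooling concept changes.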

How Computational Fluid Dynamics Can Help You Comply with ASHRAE 90.4

Any number of different cooling system design strategies or floor layout variations can affect the results, thereby changing efficiency, creating hotspots, or altering the amount of infrastructure required for the design. Computational fluid dynamics (CFD) offers a way to evaluate new designs, or alterations to existing ones, against ASHRAE data center standards before they are implemented.


Design Strategies to Reduce Data Center Energy Consumption

Designing a new data center facility or changing an existing one to maximize cooling efficiency can be a challenging task. Design strategies to reduce the energy consumption of a data center include:

- Positioning data centers based on environmental conditions (geographical location, climate, etc.)

- Design decisions based on infrastructure topology (IT infrastructure and tier standards)

- Adopting the best cooling system strategies

Improving the data center cooling system configuration is a key opportunity for the HVAC design engineer to reduce energy consumption. Some of the different cooling strategies that designers and engineers follow to conserve energy are:

- Air conditioners and air handlers

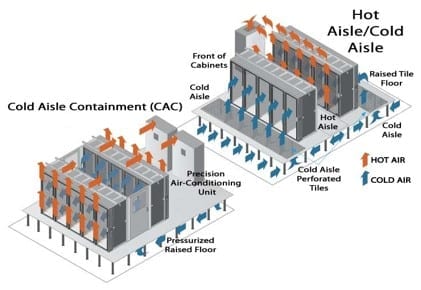

The most common cooling units are air conditioners (AC) or computer room air handlers (CRAH), which blow cold air in the required direction to remove hot air from the surrounding area.

- Hot aisle/cold aisle

Cold air is supplied to the front of the server racks (the cold aisle), and hot air exits from the rear of the racks (the hot aisle). The main goal here is to manage the airflow in order to conserve energy and reduce cooling cost. The image below shows the airflow in the cold and hot aisles of a data center.

- Hot aisle/cold aisle containment

Containment of the hot/cold aisles is done mainly to separate the cold and hot air within the room and to remove hot air from the cabinets. The image below shows the airflow for cold aisle containment and hot aisle containment separately.

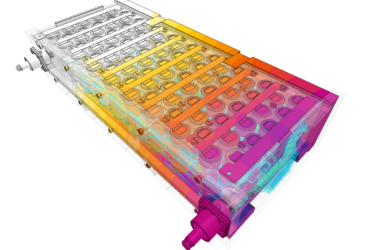

- Liquid cooling

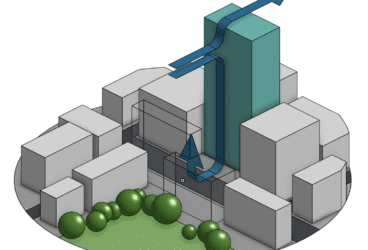

Liquid cooling systems provide an alternative way to dissipate heat from the system. This approach brings chilled water or refrigerant close to the heat source.

- Green cooling

Green cooling (or free cooling) is one of the sustainable technologies used in data centers. It can be as simple as opening a data center window fitted with filters and louvers so that cool outside air does the cooling naturally. This approach can save a considerable amount of money and energy.
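Whichever strategy is chosen, the air that passes through a rack has to carry away the heat the rack produces. A rough sensible-heat balance shows why keeping hot and cold air separate matters: the smaller the temperature rise across the rack, the more air has to be moved. The sketch below assumes constant air properties (density 1.2 kg/m³, specific heat 1005 J/(kg·K)), and the rack load is a hypothetical value.

```python
AIR_DENSITY_KG_M3 = 1.2      # assumed constant, roughly room-temperature air
AIR_CP_J_PER_KG_K = 1005.0   # assumed constant specific heat of air


def required_airflow_m3_per_s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to remove heat_load_w with a temperature rise delta_t_k.

    Uses the sensible heat balance: P = rho * cp * Q * dT, solved for Q.
    """
    if heat_load_w < 0:
        raise ValueError("heat load must be non-negative")
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


# Hypothetical 10 kW rack with two different air temperature rises.
rack_load_w = 10_000.0
for delta_t in (12.0, 6.0):
    flow = required_airflow_m3_per_s(rack_load_w, delta_t)
    print(f"dT = {delta_t:4.1f} K -> airflow = {flow:.3f} m^3/s")
```

Halving the temperature rise doubles the required airflow, and with it the fan energy. This is one reason why mixing of supply and return air is treated as a loss.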

Identifying the right combination of these cooling techniques can be challenging. Here’s how CFD simulation can make this task easier.

Case Study: Improving Data Center Cooling Systems

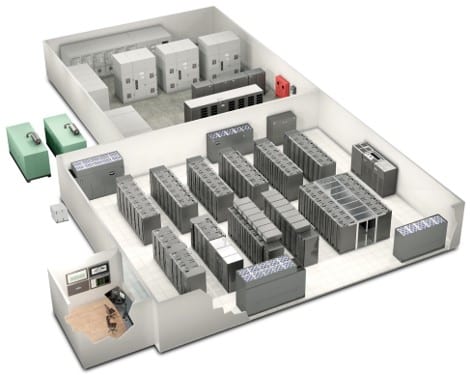

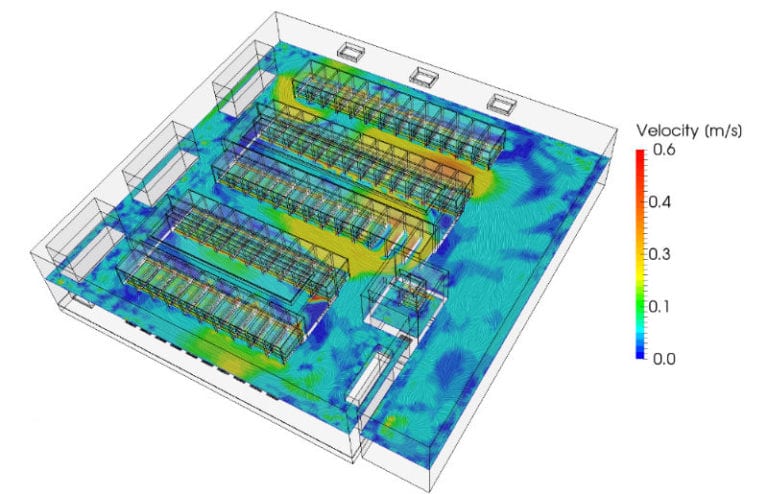

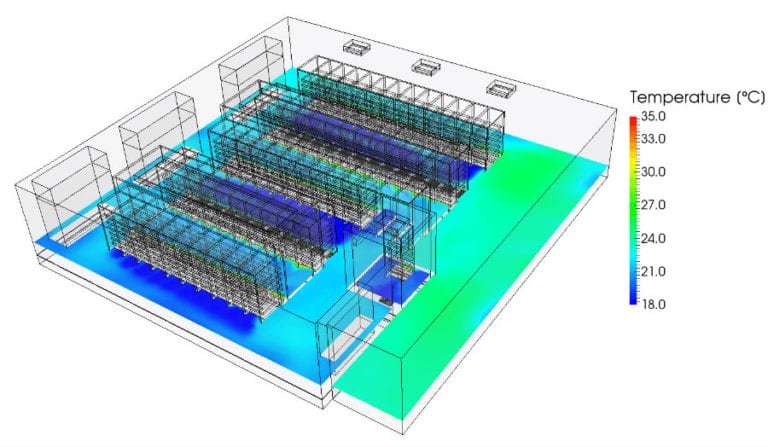

Computational fluid dynamics (CFD) can help HVAC engineers and data center designers to model a virtual data center and investigate the temperature, airflow velocity and pressure fields in a fast and efficient way. The numerical analysis presents both 3D visual contouring and quantitative data that is highly detailed yet easy to comprehend. Areas of complex recirculating flow and hotspots are easily visualized to help identify potential design flaws. Implementing different design decisions and strategies into the virtual model is relatively simple and can be simulated in parallel.

Data Center Cooling Systems: Project Overview

For the purpose of this study, we used a simulation project from the SimScale Public Projects Library that investigates two different data center cooling system designs, their cooling efficiency, and energy consumption. It can be freely copied and used by any SimScale user.

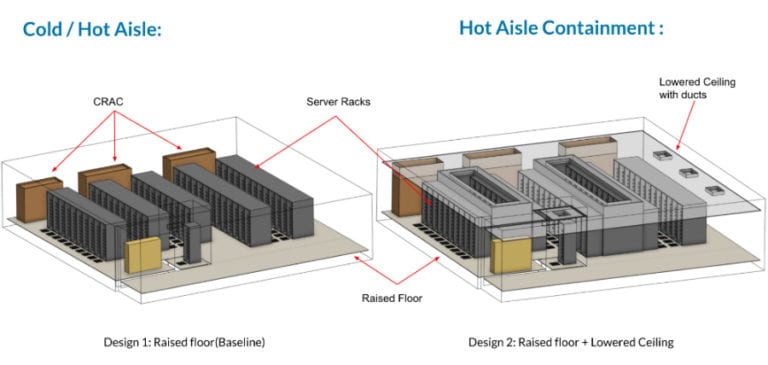

The two design scenarios are shown in the figure below:

The first design that we will consider uses a raised floor configuration, a cooling system that is frequently implemented in data centers. When this technique is used, cold air enters the room through the perforated tiles in the floor and in turn cools the server racks. The second model additionally uses hot aisle containment and a lowered ceiling configuration to improve the cooling efficiency. We will use CFD to predict and compare the performance of the two designs and determine the better cooling strategy.

ASHRAE Data Center Standards: CFD Simulation Results

Baseline Design of the Data Center Cooling System

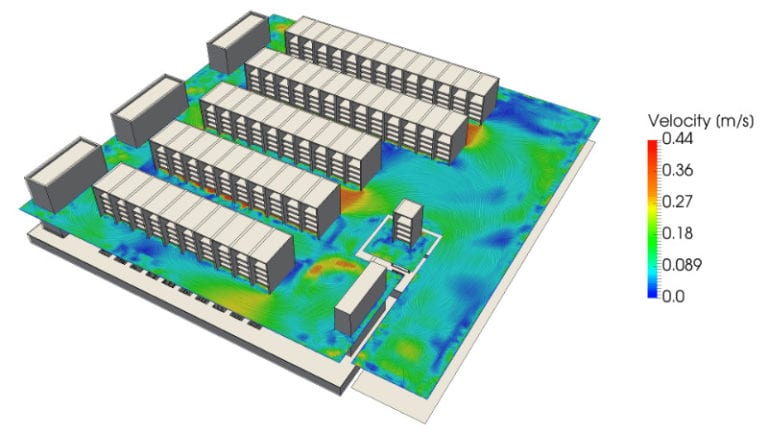

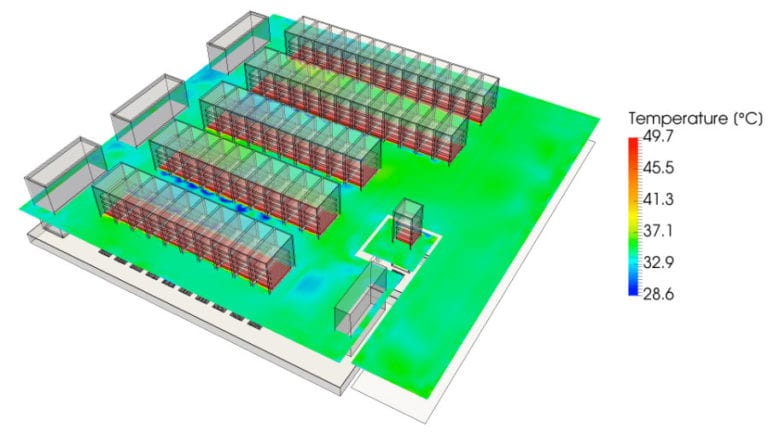

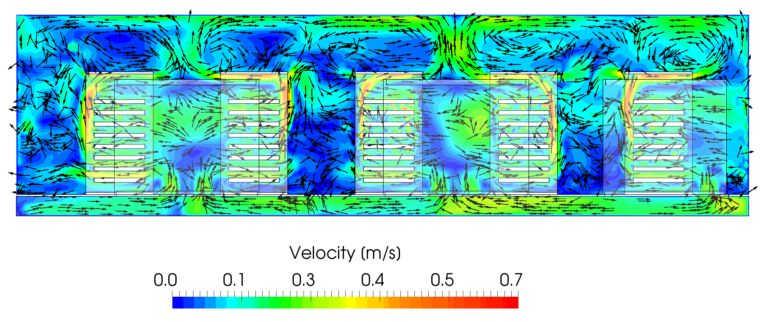

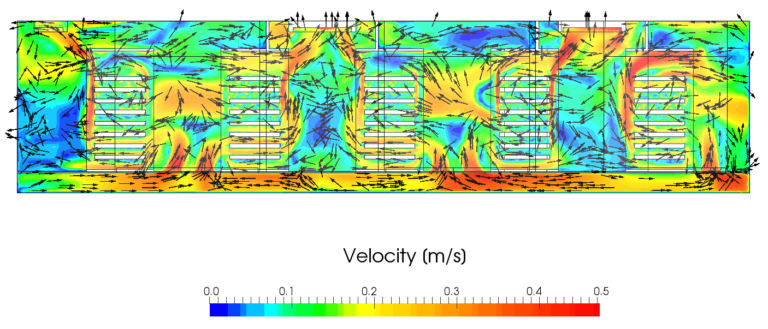

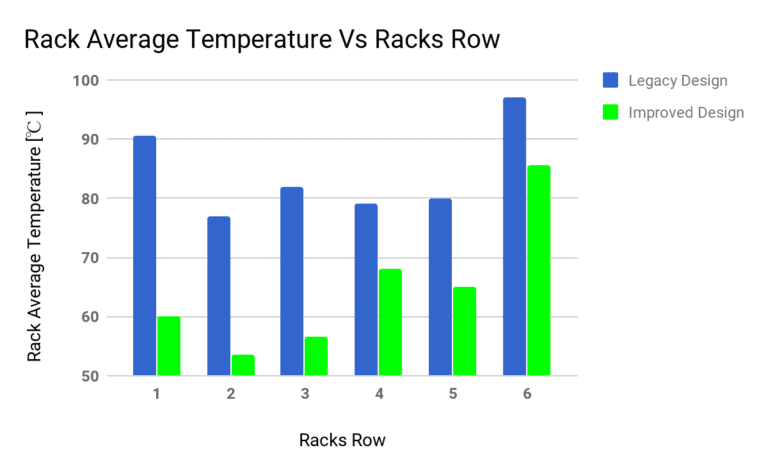

We investigated the temperature distribution and the velocity field inside the server room for both design configurations. The post-processing images show the velocity and temperature fields at the midsection of the baseline design. It can be observed that hot air is present in the region of the cold aisle. This is due to the mixing of air from the cold and hot aisles within the room. The maximum velocity for this baseline design is 0.44 m/s, with temperatures ranging from 28.6 to 49.7 degrees Celsius.

The temperature contour shows that the zones between the two server racks are considerably cooler than the others. The reasons for this can be understood by looking at the flow patterns.

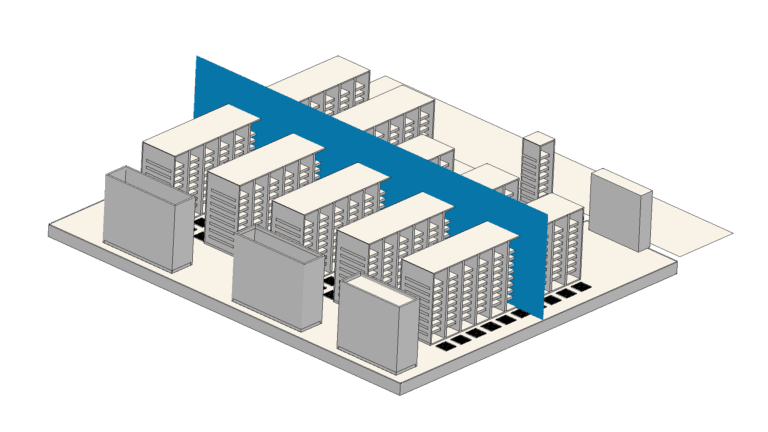

It is evident in the above image that in the server rows where inlets are present, the flow at the top of the racks is descending, instead of the desirable ascending flow from the inlets themselves. This is due to the strong recirculation currents driven by thermal buoyancy forces. This effect is very undesirable, as it reduces the cooling effectiveness, specifically for the top shelves of the server racks. It could be minimized by allowing for proper airflow above the racks, either by increasing the ceiling height, placing more distributed outlets on the ceiling, or using some kind of active flow control system (fans) to direct the flow above the server racks. A simpler option is to prevent the hot air coming from the racks from circulating freely.

The temperature plot shows significant temperature stratification, which is to be expected given the large recirculation currents. We can observe that only the lowermost servers are receiving appropriate cooling.
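A quick way to judge whether buoyancy can dominate a flow like this one is the Richardson number, Ri = g·β·ΔT·L / U², which compares buoyancy forces with inertial forces. The sketch below evaluates it with the baseline values reported above (0.44 m/s, 28.6 to 49.7 degrees Celsius). Using the maximum velocity and the full temperature range as characteristic values is our simplification, and the 2 m length scale is an assumed rack height that is not taken from the project.

```python
G = 9.81  # gravitational acceleration, m/s^2


def richardson_number(t_cold_c: float, t_hot_c: float,
                      velocity_m_s: float, length_m: float) -> float:
    """Ri = g * beta * dT * L / U^2, with beta = 1 / T_mean for an ideal gas."""
    if velocity_m_s <= 0 or length_m <= 0:
        raise ValueError("velocity and length must be positive")
    delta_t = t_hot_c - t_cold_c
    t_mean_k = (t_cold_c + t_hot_c) / 2.0 + 273.15  # mean temperature in kelvin
    beta = 1.0 / t_mean_k                           # thermal expansion coefficient, 1/K
    return G * beta * delta_t * length_m / velocity_m_s ** 2


# Temperatures and velocity from the baseline results; length scale is ASSUMED.
ri = richardson_number(t_cold_c=28.6, t_hot_c=49.7, velocity_m_s=0.44, length_m=2.0)
print(f"Ri = {ri:.1f}")
```

With these inputs the result is roughly 7. Values well above 1 indicate that buoyancy outweighs the momentum of the supply air, which is consistent with the recirculation and stratification seen in the baseline design.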

Improved Design of the Data Center Cooling System

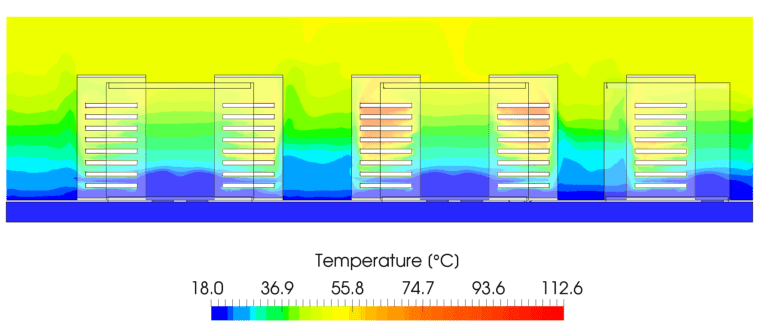

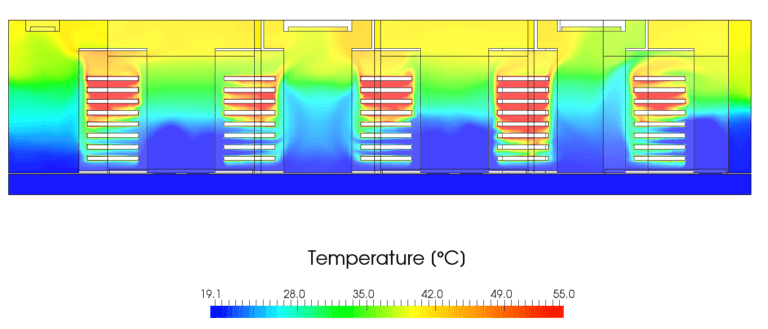

The next two pictures show the velocity and temperature distributions for the improved design scenario at the middle section plane.

The velocity field shows that the flow is now driven to the outlets. This is due to the presence of containment on top of the racks. It also results in a better temperature distribution: the cold zones between the server racks are noticeably larger.

The above image shows how the hot aisle containment prevents the ascending flow from recirculating back to the inlet rows. This results in a cleaner overall flow pattern compared to what was seen in the previous design. It is also evident that the new design reduces temperature stratification, particularly in the contained regions between the servers.

The average temperature calculated for each rack is lower for the improved design by about 23%.

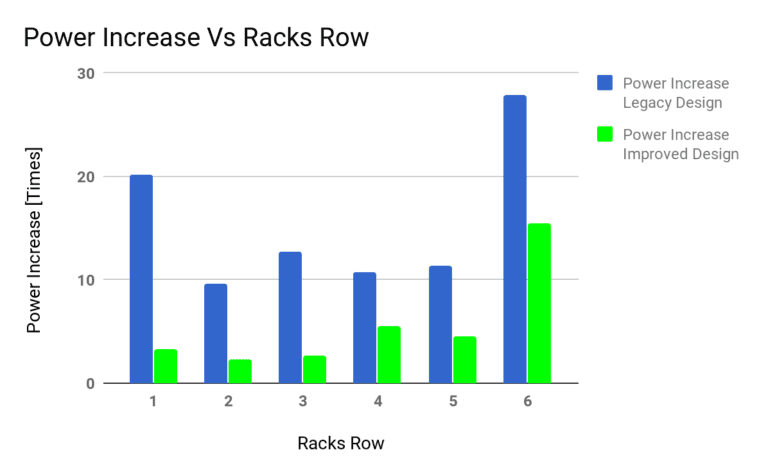

This is also reflected in a decrease in the amount of power that has to be supplied to keep the servers from overheating. On average, energy savings of 63% were achieved for the data center cooling system.
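To put the cooling savings into a facility-wide perspective, they can be weighted by the share of total energy that cooling accounts for. The sketch below combines the 63% figure with the 50% cooling share quoted at the start of this article. Treating that share as valid for this particular facility, and assuming all other loads stay unchanged, are our simplifications.

```python
def facility_savings_fraction(cooling_share: float, cooling_savings: float) -> float:
    """Fraction of total facility energy saved when only the cooling energy is reduced."""
    for name, value in (("cooling_share", cooling_share),
                        ("cooling_savings", cooling_savings)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return cooling_share * cooling_savings


# 50% cooling share (typical figure cited earlier) and 63% cooling savings (this study).
saved = facility_savings_fraction(cooling_share=0.50, cooling_savings=0.63)
print(f"Estimated facility-wide savings: {saved:.1%}")
```

Under these assumptions, the improved design would cut total facility energy use by a little under a third.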

Data Center Cooling Systems: Conclusions & Compliance with ASHRAE Data Center Standards

A typical data center may consume as much energy as 25,000 households. With the rising cost of electricity, increasing energy efficiency has become the primary concern for today’s data center designers.

This case study was just a small illustration of how CFD simulation can help designers and engineers validate their design decisions and accurately predict the performance of their data center cooling systems, so that no energy is wasted and the design stays in line with ASHRAE data center standards. The whole analysis was done in a web browser and took only a few hours of manual and computing time.


References

- [1] W. Van Heddeghem et al., "Trends in worldwide ICT electricity consumption from 2007 to 2012," Comput. Commun., vol. 50, pp. 64–76, Sep. 2014

- [2] "Top 10 energy-saving tips for a greener data center," Info-Tech Research Group, London, ON, Canada, Apr. 2010, http://static.infotech.com/downloads/samples/070411_premium_oo_greendc_top_10.pdf

- [3] ASHRAE Standard 90.4-2016 – Energy Standard for Data Centers, https://www.techstreet.com/ashrae/standards/ashrae-90-4-2016