NOTE: This is an example procedure showing how I was able to meet a particularly difficult layering requirement. It is not a way to fix all layering issues. However, I do believe the methods I present here can solve a lot of the layering problems you may be having. The parameter values causing your problems are likely not the same as mine were in this case, but here is at least a method to figure out which parameters are causing your layers to disappear, so that you can then play with those parameters. It is an iterative process.

Before I show my layering solution for this geometry, I would like to complain a little first.

Although the end result is mainly a one-parameter value change (‘Min normalized cell determinant’), the road I took to discovering that parameter and its required value was long indeed, and I was GREATLY hindered by not having access to the full Meshing Log.

I spent many hours watching pages of log scroll by in real time, many times even capturing the passing lines to the clipboard and assembling a full log in a Notepad++ document.

From this, here are some of the relevant things I think I learned (I could be wrong about a lot of things beyond this point, but it did lead me to some success):

- When adding layers, Snappy is called twice: first to create the unlayered mesh, and then a second time to layer it. This raises the question: why can’t we layer an existing mesh?

- When Snappy starts layering, it appears to create a perfect set of layers on each geometry face, with all the layers you asked for: effectively a 100% inflated mesh. The layers fully wrap around ALL the features, but they are eventually removed from a lot of them (darn). We just have to figure out how to stop as much of this face removal as possible while still leaving the mesh with reasonable quality for simulation.

With that knowledge, I continued watching the log in real time as those layers disappeared during the layer quality checking iterations performed on that perfect 100% layered mesh.

I finally noticed that the KEY part of the log to watch was the end of ‘Layer addition iteration 0’.

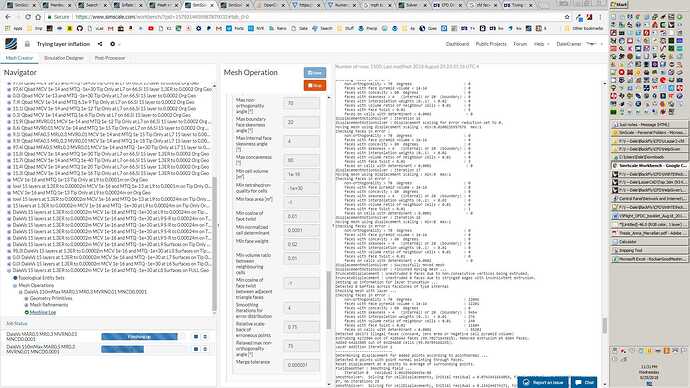

This is where I finally had my AHA moment: I saw nearly 2,000,000 faces get discarded whose determinant was < 0.001. The screen capture above shows that location in the log. Unfortunately it is not from the run where I saw 2,000,000 faces disappear, but at least you can see 101,173 faces being discarded.

I realized that the end of ‘Layer addition iteration 0’ in the meshing log is where most of the nice layers disappeared, and it has now become the first place I look when I am having layering difficulties. It shows which mesh quality parameters the layered cells are failing. It is then up to me to decide whether relaxing those parameters will still give me good enough mesh quality for simulation.
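
Once the log has been saved to a text file, a short script can pull out the end of ‘Layer addition iteration 0’ and list which checks discarded the most faces. This is a minimal sketch, not a SimScale or OpenFOAM tool: it ASSUMES each check is reported on one line ending in ` : <count>` (roughly like the screen capture above), and the sample log text in it is made up for illustration. Not every line of the form `text : number` is a quality check, so read the output alongside the log.

```python
import re

# ASSUMED line format for one quality check: "<description> : <count>".
CHECK_LINE = re.compile(r"^\s*(?P<check>.*\S)\s*:\s*(?P<count>\d+)\s*$")
NEXT_ITERATION = "Layer addition iteration"


def discarded_counts(log_text: str, header: str = "Layer addition iteration 0") -> dict:
    """Map each check description to its face count, taken from the block that
    starts at the LAST occurrence of `header` and ends at the next
    'Layer addition iteration' header (or the end of the log)."""
    start = log_text.rfind(header)
    if start == -1:
        return {}
    block = log_text[start + len(header):]
    end = block.find(NEXT_ITERATION)
    if end != -1:
        block = block[:end]
    counts = {}
    for line in block.splitlines():
        match = CHECK_LINE.match(line)
        if match:
            counts[match.group("check")] = int(match.group("count"))
    return counts


def report(counts: dict) -> None:
    """Print the non-zero counts, largest first."""
    for check, n in sorted(counts.items(), key=lambda item: item[1], reverse=True):
        if n > 0:
            print(f"{n:>10}  {check}")


# Made-up log text in the assumed format, for illustration only.
SAMPLE_LOG = """\
Layer addition iteration 0
    faces with face pyramid volume < 1e-16 : 12
    faces on cells with determinant < 0.001 : 101173
Layer addition iteration 1
    faces on cells with determinant < 0.001 : 5
"""

if __name__ == "__main__":
    report(discarded_counts(SAMPLE_LOG))
```

Taking the last occurrence of the header means that if several runs were pasted into one file, only the most recent one is reported.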

In my case, I started by trying a value of 1e-9 for ‘Min normalized cell determinant’ and instantly retained nearly 100% layering in the mesh that Snappy left me.

Then I tried 1e-6 and still had nearly 100%, then 1e-4 and still had nearly 100%, and I left it there. Yeah! (The default value of 1e-3 left only about 2% layering.)

I have no idea whether a 1e-4 determinant cutoff instead of the default 1e-3 reduces my final mesh quality significantly, but the simulation that was already set up in that project seemed to converge just fine on this mesh.

The second most significant parameter to get right is ‘Min cell volume [m³]’. I chose my value of 1e-16 like this:

- Find your layer creation parameters. Mine were 15 layers to an FLT (final layer thickness) of 0.0004 m at an ER (expansion ratio) of 1.3. These were likely originally determined by the need for an EWD (estimated wall thickness, i.e. 1st layer thickness) of 0.00001 m, as calculated by the Online y+ Calculator. (NOTE: If you are in the process of trying to create layers on a geometry, make sure your ‘Bounding Box geometry primitive’ is divided into sufficiently sized but SQUARE (cubic) cells along all of the x, y and z axes. This creates a Level 0 SQUARE mesh grid on all x, y and z faces of the Background Mesh Box.)

- Create an RLC (refinement level chart) in Excel. It lists the RLEL (refinement level edge length) for every refinement level you will use, like this (the ‘Axis Edge Length’ is the length of the ‘Background Mesh Box’ along that axis, and ‘# Cells along it’ is the meshing parameter ‘Number of cells in the ? direction’):

  Note: best practice is to make sure the whole surface of the geometry is refined to a level where the RLEL is roughly equal to the FLT. Each refinement level halves the edge length, so the RLEL at level n is (Axis Edge Length / # Cells along it) / 2^n. My FLT was 0.0004 m and RLEL6 was 0.000625 m (a Level 0 edge length of 0.04 m halved six times). This is a ‘get you close’ rule for choosing the surface refinement level. In my case level 6 was chosen and worked; level 5 did not create any layers, and level 7 made the mesh much larger without a significant increase in the inflated layer percentage.

- Calculate a value I call MFLV (max first layer volume) as (RLEL#)(1st layer thickness)^2. In my case MFLV = (RLEL6)(EWD)^2 = (0.000625)(0.00001)^2 = 6.25e-14 m³. Then I found that an MCV (‘Min cell volume’) of about MFLV/1000 (6.25e-17, i.e. roughly 1e-16) was a good value. I determined that by watching the end of ‘Layer addition iteration 0’ in the meshing log in real time and making sure that not many cells smaller than my chosen MCV were being discarded from the layers. I hope there is a better way to determine MCV, but that is what I have for now.
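
The three steps above can be sketched in a few lines of Python, using the numbers from this post: EWD = 0.00001 m, ER = 1.3, 15 layers and RLEL6 = 0.000625 m. It assumes the layers grow geometrically (layer k, counting from 0 at the wall, is EWD·ER^k thick) and that each refinement level halves the edge length. The background box size (2.0 m with 50 cells, giving the 0.04 m Level 0 edge that reproduces RLEL6) is hypothetical, so substitute your own ‘Axis Edge Length’ and ‘# Cells along it’. MFLV follows the definition used above, and the MFLV/1000 factor is just the empirical choice described in this post, not a rule.

```python
def layer_thicknesses(first: float, ratio: float, n_layers: int) -> list:
    """Thickness of each layer, wall outwards, assuming geometric growth:
    layer k (counting from 0) is first * ratio**k."""
    return [first * ratio**k for k in range(n_layers)]


def rlel(level0_edge: float, level: int) -> float:
    """Refinement level edge length: each level halves the Level 0 edge."""
    return level0_edge / 2**level


def mflv(rlel_value: float, first_layer: float) -> float:
    """MFLV as defined in this post: RLEL * (1st layer thickness)**2."""
    return rlel_value * first_layer**2


if __name__ == "__main__":
    EWD = 1e-5      # 1st layer thickness [m], from the post
    ER = 1.3        # expansion ratio, from the post
    N_LAYERS = 15   # number of layers, from the post
    FLT = 4e-4      # final layer thickness [m], from the post

    layers = layer_thicknesses(EWD, ER, N_LAYERS)
    print(f"final layer: {layers[-1]:.3e} m (post's FLT: {FLT:.1e} m)")
    print(f"total layer stack: {sum(layers):.3e} m")

    # HYPOTHETICAL background box: 2.0 m along the axis with 50 cells gives a
    # 0.04 m Level 0 edge, which reproduces the post's RLEL6 = 0.000625 m.
    AXIS_EDGE_LENGTH, N_CELLS = 2.0, 50
    level0 = AXIS_EDGE_LENGTH / N_CELLS
    for level in range(4, 9):
        edge = rlel(level0, level)
        print(f"level {level}: RLEL = {edge:.4e} m, RLEL/FLT = {edge / FLT:.2f}")

    m = mflv(rlel(level0, 6), EWD)
    print(f"MFLV = {m:.3e} m^3, MFLV/1000 = {m / 1000:.3e} m^3")
```

With these inputs the 15th layer comes out at about 3.94e-4 m, consistent with the FLT of 0.0004 m, the whole stack is about 1.67e-3 m thick, and MFLV/1000 is about 6.25e-17 m³. The RLEL/FLT column is only a guide: level 6 gives a ratio of about 1.56, and that is the level that worked in this case.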

The third most important parameter to get right is ‘Min tetrahedron-quality for cells’. This is a VERY confusing parameter. I ended up having to turn it off for this case with a value of -1e+30.
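
For anyone running snappyHexMesh directly in OpenFOAM rather than through the UI, the three values discussed above would go in the `meshQualityControls` dictionary of `snappyHexMeshDict`. The sketch below just prints those entries. The mapping from the UI labels to the OpenFOAM keywords `minDeterminant`, `minVol` and `minTetQuality` is an ASSUMPTION, and the output is only a fragment: a real `meshQualityControls` dictionary needs its other entries too.

```python
# Values settled on in this post. The mapping from the UI labels to these
# OpenFOAM snappyHexMeshDict keywords is an ASSUMPTION; check your own dict.
QUALITY_VALUES = {
    "minDeterminant": 1e-4,  # 'Min normalized cell determinant' (post: default 1e-3)
    "minVol": 1e-16,         # 'Min cell volume [m³]'
    "minTetQuality": -1e30,  # 'Min tetrahedron-quality for cells', -1e30 turns it off
}


def mesh_quality_fragment(values: dict) -> str:
    """Format the entries as a (partial) meshQualityControls dictionary."""
    lines = ["meshQualityControls", "{"]
    for key, value in values.items():
        lines.append(f"    {key} {value:g};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(mesh_quality_fragment(QUALITY_VALUES))
```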

There are a number of ‘Max Iteration…’ parameters that I changed from their defaults, and I think I will keep using the larger numbers, because it is a pain to have a good layering setup stopped by too few iterations.

Here are all the Snappy parameters that I used (I hope I have discussed all the ones that were important in allowing me to get my 15 layers at this small EWD of 0.00001m):


I wish each parameter name had its default value listed beside it (next to the units bracket).

I will be editing this as I recall more details from my notes or find something I got wrong here. I should post this before I lose all this work…

I hope that there is a long discussion following this post. @1318980

Dale