Okay, I’ve been doing some meshing benchmarking, and I’m not seeing a “scaling factor” when I crank up the cores. Is it possible to get some insight into why this wouldn’t be observed? Here is what I have (to get 82k elements):

2 cores: 240s (60s queue)

4 cores: 47s (no queue)

16 cores: 90s (17s queue)

32 cores: 73s (17s queue)

Here it is without the queue:

2 cores: 180s

4 cores: 47s

16 cores: 73s

32 cores: 56s

Now, I understand this is a very small model, and there are other factors at play, not just the act of calculating the mesh, so should I increase the model size an order of magnitude to see a trend?

Also, about this queue… can you share with us exactly what we are waiting for? Are all the cores the same, or are some cores specifically set up to work together? Meaning, if I choose 32 cores, do I have to wait for other people who are using those 32, or am I just waiting for the next 32 cores to free up? Final question: is there ever a chance that fewer cores will finish faster than more cores on total wall time (e.g. due to a queue)? Thanks!

@fastwayjim - great questions. I think my colleague @jprobst should be able to provide some insights here.

Hi @fastwayjim

thanks for providing the results of your benchmark!

Let me shed some light on your numbers. Whenever a meshing job is executed there is some overhead involved, for example copying data, creating the 3D visualizations for the browser, etc. Not all of these steps scale with the number of cores. Since SimScale is a highly distributed system, some communication over networks takes place, which usually does not get noticeably faster with more processors.

All processing that is limited by the number and power of the CPUs is called CPU-bound, while all operations that have to wait on reading, writing, or the latency of drives or network connections are called I/O-bound (input/output-bound). Increasing the number of cores reduces the time spent on CPU-bound processing, while it has almost no impact on I/O-bound processing.

The meshing jobs in your example are relatively short, which means that the CPU-bound fraction (which would benefit from more CPUs) is rather low and the influence of the I/O-bound steps is significant. Hence, your suspicion that a larger model would scale better is correct. With a larger model you would have more CPU-bound processing relative to I/O-bound processing.
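To make the CPU-bound versus I/O-bound split concrete, here is a minimal Python sketch. The timings are the queue-free numbers from the benchmark above. The `modeled_time` function and the 60 s / 40 s split are purely illustrative assumptions, not measured values and not how SimScale accounts for job time internally.

```python
# Queue-free wall times from the benchmark in this thread (cores -> seconds).
timings = {2: 180, 4: 47, 16: 73, 32: 56}


def speedup(timings, baseline_cores):
    """Speedup of each run relative to the run with `baseline_cores`."""
    base = timings[baseline_cores]
    return {cores: base / t for cores, t in timings.items()}


def modeled_time(cpu_seconds_one_core, io_seconds, cores):
    """Toy model: the CPU-bound part divides across cores, the I/O-bound part does not.

    It ignores the communication overhead that grows with the core count.
    """
    return cpu_seconds_one_core / cores + io_seconds


for cores, s in speedup(timings, 2).items():
    print(f"{cores:>2} cores: {s:.2f}x relative to 2 cores")

# Hypothetical split (an assumption): 60 s of CPU-bound work on one core
# plus 40 s of I/O-bound overhead that no number of cores can shrink.
for cores in (2, 4, 16, 32):
    print(f"{cores:>2} cores: modeled {modeled_time(60.0, 40.0, cores):.1f} s")
```

In this toy model the wall time can never drop below the I/O-bound part, which is why short jobs flatten out quickly as cores are added.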

There is also such a thing as too many cores: if a rather small job is executed on too many CPUs, the processors lose more time communicating and waiting on each other than doing actual work. In your case you seem to have hit the sweet spot with 4 cores. A larger job can benefit from more processors more easily. For example, in the case of CFD, the optimum is usually somewhere around 100,000-250,000 cells per processor.
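As a rough illustration of that rule of thumb, the sketch below turns a cell count into a range of core counts. The function name `suggested_core_range` is hypothetical, and the 100,000-250,000 bounds are simply the CFD figure quoted above, so treat the result as a starting point rather than a guarantee.

```python
import math


def suggested_core_range(n_cells, min_cells_per_core=100_000, max_cells_per_core=250_000):
    """Return (fewest, most) cores that keep cells per core within the given bounds."""
    # Fewest cores: each core carries at most max_cells_per_core cells.
    fewest = max(1, math.ceil(n_cells / max_cells_per_core))
    # Most cores: each core still carries at least min_cells_per_core cells.
    most = max(fewest, n_cells // min_cells_per_core)
    return fewest, most


# The 82k-element model from this thread, and a hypothetical 4M-cell model.
for n_cells in (82_000, 4_000_000):
    fewest, most = suggested_core_range(n_cells)
    print(f"{n_cells:>9,} cells: roughly {fewest} to {most} cores")
```

By this estimate an 82k-element model is too small to keep even two cores busy, so it should not be expected to show a clear scaling trend.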

The queue also has to do with SimScale being a distributed system where computation takes place on many different machines. When a user issues a meshing or simulation job, we first check whether there is any free hardware where the job can run. If yes, the job starts right away (this is what happened in the 4-core example). If not, we wait for a little while to see if any hardware becomes free (this explains the other delays). If no hardware has become free after some time, we add more to the pool. This can take about 2-3 minutes. The job stays queued in the meantime.
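Because queue time adds to the run time, the run with the shortest total wall time is not necessarily the one with the most cores. A small sketch using the numbers from this thread (the names `runs` and `total_wall_time` are just for illustration):

```python
# cores -> (queue seconds, run seconds), taken from the benchmark in this thread.
runs = {2: (60, 180), 4: (0, 47), 16: (17, 73), 32: (17, 56)}


def total_wall_time(queue_s, run_s):
    """Wall time the user actually waits: time in the queue plus time running."""
    return queue_s + run_s


# Pick the core count with the smallest total wall time.
best = min(runs, key=lambda cores: total_wall_time(*runs[cores]))

for cores, (queue_s, run_s) in runs.items():
    print(f"{cores:>2} cores: {total_wall_time(queue_s, run_s)} s total")
print(f"Shortest total wall time: {best} cores")
```

Queue times vary with how busy the hardware pool is, so the same comparison on another day may come out differently.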

I hope I was able to answer your questions. Have fun simulating!

Johannes


Thank you for the insight, @jprobst, this is very helpful!

The 100k - 250k cells per core is a very useful number to know! I will bump up the element count and rerun. I would love to be able to see the scaling factor, so I know where the “sweet spots” are for certain model sizes. I think this would be very helpful to know.

Hi @fastwayjim

my colleague @rszoeke gave me a heads-up that my previous statement was not complete. It also depends on which meshing algorithm is being used. My answer was more CFD-oriented, and I skipped the FEA facts.

The meshing algorithms

- Hex-dominant automatic for internal flow (only CFD)

- Hex-dominant automatic for external flow (only CFD)

- Hex-dominant parametric (only CFD)

scale their CPU bound workload over multiple CPUs. The other meshing algorithms, namely

- Tetrahedral automatic

- Tetrahedral parametric

- Tetrahedral with local refinements

- Tetrahedral with layer refinements

do not. They benefit only from the fact that more CPUs also bring more memory.

So, if you are using meshing algorithms from the latter group, the speedup will be negligible, even for larger models.

I hope I didn’t cause too much confusion here

Johannes

Ah, I see. So the only reason I would want more cores for an FEA mesh, is if I am running out of memory?

Is a multi-core mesher for FEA on the horizon?

That’s right. If the meshing runs out of memory, we show an error message in the event log. If you see it, the best thing to do is to try again with more cores.

Not yet, unfortunately. The software which we use for meshing does not have multi-core support (yet). Once it gets added we’re going to offer it asap.

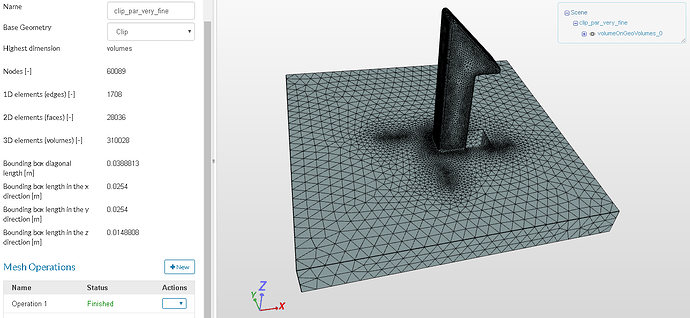

I recently noticed something interesting… This parametric mesh is showing a pattern (cyclical?) in the mesh that does not appear in the geometry. Is this a typical result from the meshing algorithm?